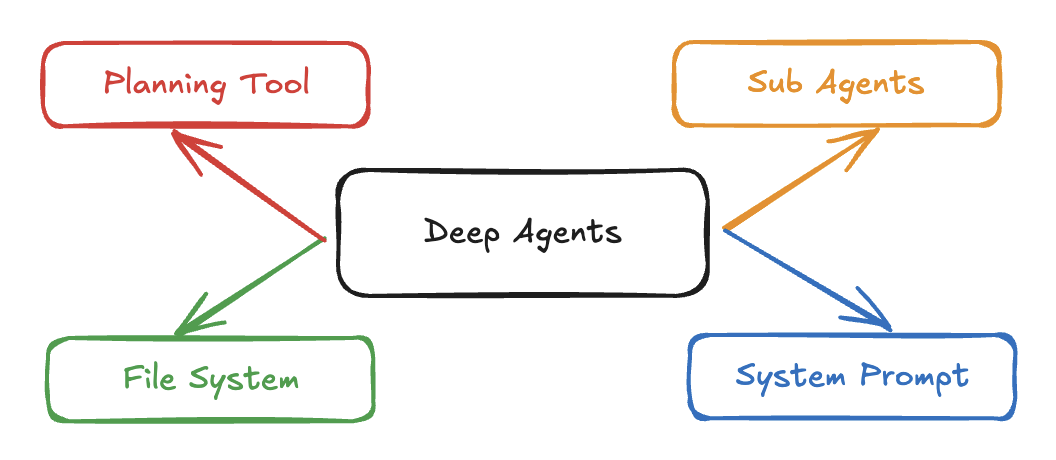

DeepAgents is a Python framework for building advanced AI agents capable of planning, hierarchical delegation, and file persistence through a middleware-based architecture. Built on LangGraph Workflows and LangChain, it addresses the limitation of “shallow” agents that simply call tools in a loop without sophisticated planning or multi-step coordination for complex tasks.

The framework emerged from recognizing that basic tool-calling loops fail on longer, more complex tasks requiring decomposition, parallel exploration, and state management across multiple conversation turns. DeepAgents provides infrastructure for agents that can plan multi-step tasks, delegate to specialized sub-agents, maintain virtual filesystems for intermediate results, and layer system prompts for sophisticated guidance.

Four Pillars Architecture

Explicit Over Implicit Planning

Externalizing agent plans into visible task lists, rather than leaving them implicit in model reasoning, makes debugging far more tractable: you can inspect what the agent intends, not just what it does.

DeepAgents rests on four foundational capabilities that distinguish it from simpler agent frameworks:

Planning Tool: Agents maintain explicit task lists through the write_todos tool, tracking multi-step workflows with status management (pending, in_progress, completed). The planning capability connects to broader patterns in Multi-Agent Research Systems, where decomposing and tracking subtasks proves essential for complex research.

Sub-Agent System: Hierarchical delegation enables spawning specialized agents for focused subtasks with context isolation. The main agent delegates work through the task tool, which creates sub-agents that execute independently with their own context windows. This implements the Orchestrator-Worker Pattern where a coordinator decomposes problems while workers execute specialized tasks in parallel.

Virtual File System: An in-memory filesystem provides persistence for intermediate results without disk I/O. Agents use ls, read_file, write_file, and edit_file tools to manage state across multiple steps. This virtual persistence enables agents to accumulate findings, store partial results, and maintain context across long-running tasks without external storage dependencies.

Detailed System Prompt: Layered prompts combine user instructions with base guidance and middleware-specific instructions. This prompt architecture ensures agents understand how to use planning tools, when to delegate to sub-agents, and how to manage context effectively. The layering enables extending agent capabilities through middleware without rewriting base prompts.
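As a rough illustration of the planning pillar, the sketch below keeps a todo list in plain Python. It assumes, as in the state schema shown later, that each todo holds a task description and one of the three statuses, and that each write_todos call submits the full updated list. The `write_todos`, `next_pending`, and `render_plan` functions are hypothetical stand-ins, not DeepAgents' implementation.

```python
from typing import List, Literal, Optional, TypedDict

# Hypothetical sketch of an explicit, inspectable plan (not the framework's code)
VALID_STATUSES = ("pending", "in_progress", "completed")
MARKERS = {"pending": "[ ]", "in_progress": "[~]", "completed": "[x]"}


class Todo(TypedDict):
    task: str
    status: Literal["pending", "in_progress", "completed"]


def write_todos(todos: List[Todo]) -> List[Todo]:
    """Hypothetical planning tool: validate the submitted plan and return it as the new list."""
    for todo in todos:
        if todo["status"] not in VALID_STATUSES:
            raise ValueError(f"unknown status: {todo['status']!r}")
    return [Todo(task=t["task"], status=t["status"]) for t in todos]


def next_pending(todos: List[Todo]) -> Optional[str]:
    """Return the first task that has not been started, or None when nothing is pending."""
    return next((t["task"] for t in todos if t["status"] == "pending"), None)


def render_plan(todos: List[Todo]) -> str:
    """Human-readable view of the plan, for inspecting what the agent intends."""
    return "\n".join(f"{MARKERS[t['status']]} {t['task']}" for t in todos)
```

A plan with one completed, one in-progress, and one pending item renders as three checkbox-style lines, which is the kind of visibility the explicit-planning argument relies on.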

Middleware Architecture

The middleware stack is DeepAgents’ core extensibility mechanism, assembled in deterministic order to inject tools, state, and prompt modifications. Each middleware component can extend the agent state schema, provide tools, and modify system prompts before LLM invocation.

The default stack executes in this order:

PlanningMiddleware: Adds the write_todos tool and extends state with todos: List[Todo] for tracking task status. Its system prompt additions guide agents to create task lists for multi-step work and mark items completed as they progress.

FilesystemMiddleware: Adds file operation tools (ls, read_file, write_file, edit_file) and extends state with files: Dict[str, str] mapping filenames to content. Because the files live in agent state, they persist across steps within a run, and across runs when a checkpointer is configured.

SubAgentMiddleware: Adds the task tool for delegating to sub-agents and manages the sub-agent registry. This middleware enables hierarchical task decomposition in which the main agent spawns specialized sub-agents for focused exploration.

SummarizationMiddleware: Automatically compresses conversation history to prevent Context Rot. When a thread grows long, older history gets summarized, maintaining essential context while keeping token usage from growing without bound. This implements Reducing Context strategies automatically.

AnthropicPromptCachingMiddleware: Enables prompt caching with a 5-minute TTL for repeated system prompt prefixes. This reduces latency and cost when agents make multiple LLM calls that share the same prompt prefix, which is particularly valuable for iterative research workflows.

HumanInTheLoopMiddleware: Added only when the tool_configs parameter specifies which tools require human approval. This enables interrupt-based workflows where agents propose actions and humans approve them before execution.

Custom middleware can be appended to this stack, enabling domain-specific extensions without modifying core framework code. The deterministic ordering ensures predictable behavior where middleware components can rely on earlier middleware having configured expected state and tools.
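The ordering guarantee can be illustrated with a framework-independent sketch in which each middleware contributes tools and state keys, and may declare state keys it expects earlier middleware to have provided. `ToyMiddleware` and `assemble` are hypothetical names for illustration, not DeepAgents classes; rejecting duplicate tool names is a design choice of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class ToyMiddleware:
    """Hypothetical middleware: the tools and state keys it adds, plus keys it relies on."""
    name: str
    tools: Dict[str, Callable] = field(default_factory=dict)
    state_keys: Dict[str, type] = field(default_factory=dict)
    requires: List[str] = field(default_factory=list)  # keys earlier middleware must provide


def assemble(stack: List[ToyMiddleware]) -> Tuple[Dict[str, Callable], Dict[str, type]]:
    tools: Dict[str, Callable] = {}
    schema: Dict[str, type] = {"messages": list}
    for mw in stack:  # deterministic: list order is assembly order
        missing = [k for k in mw.requires if k not in schema]
        if missing:
            raise ValueError(f"{mw.name} needs state keys {missing} from earlier middleware")
        for tool_name, fn in mw.tools.items():
            if tool_name in tools:
                raise ValueError(f"{mw.name} redefines tool {tool_name!r}")
            tools[tool_name] = fn
        schema.update(mw.state_keys)
    return tools, schema
```

Appending a custom middleware that reads `files` works only if the filesystem middleware comes first; placing it earlier fails at assembly time rather than mid-run.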

Sub-Agent Delegation Patterns

Context Isolation Paradox

Sub-agents intentionally receive only a task description, not the main agent's full conversation history. This keeps context from growing without bound, at the cost of potential information loss.

The sub-agent system enables hierarchical task decomposition through three delegation patterns:

General-Purpose Subagent: Always available through the task tool, inheriting all tools from the main agent. This default sub-agent uses the same instructions as the main agent, providing basic delegation capability without configuration.

Custom Subagents (SubAgent): Defined via the subagents parameter with specialized prompts, tool subsets, and optional custom models. These enable focused agents for specific domains: a research sub-agent with search tools, an analysis sub-agent with data processing tools, or a writing sub-agent with summarization tools.

Custom Graph Subagents (CustomSubAgent): Pre-built LangGraph agents that bypass the default middleware stack entirely. This pattern enables integrating existing agent implementations or implementing radically different agent architectures as composable units.
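To make the isolation concrete, here is a hypothetical dispatcher in plain Python: a registry that always contains a general-purpose entry, plus custom sub-agents keyed by name. Each sub-agent is modeled as a function that receives a fresh message list containing only the task description, never the caller's history. `SubAgentRegistry`, the `"general-purpose"` key, and the stub agents are illustrative assumptions, not DeepAgents APIs.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
AgentFn = Callable[[List[Message]], str]


class SubAgentRegistry:
    """Hypothetical registry mapping sub-agent names to callables."""

    def __init__(self, general_purpose: AgentFn) -> None:
        # The general-purpose entry is always present, like the default sub-agent
        self._agents: Dict[str, AgentFn] = {"general-purpose": general_purpose}

    def register(self, name: str, agent: AgentFn) -> None:
        if name in self._agents:
            raise ValueError(f"duplicate sub-agent name: {name!r}")
        self._agents[name] = agent

    def task(self, name: str, description: str) -> str:
        if name not in self._agents:
            raise KeyError(f"unknown sub-agent: {name!r}")
        # Context isolation: the sub-agent starts a brand-new conversation that
        # contains only the task description, never the caller's history
        fresh_context: List[Message] = [{"role": "user", "content": description}]
        return self._agents[name](fresh_context)
```

However long the parent conversation is, the sub-agent's context starts at a single message, which is exactly the trade-off described above: bounded context in exchange for whatever the description leaves out.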

Virtual Filesystem as Communication Channel

The shared filesystem enables sub-agents to write detailed files while returning brief summaries, creating a dual-channel communication pattern that balances context efficiency with information richness.

Filesystem state merges between agents: sub-agents can write files that persist in the main agent's filesystem. A research sub-agent might write detailed findings to files while returning a brief summary, letting the main agent access full detail if needed.

State Management

DeepAgents uses a hierarchical state schema extending LangChain’s AgentState:

```python
from typing import Annotated, Dict, List, Literal, TypedDict

from langchain.agents import AgentState  # import location varies across LangChain versions

# todo_reducer and file_reducer are the framework's merge functions (not shown here)


class Todo(TypedDict):
    task: str
    status: Literal["pending", "in_progress", "completed"]


class PlanningState(AgentState):
    todos: Annotated[List[Todo], todo_reducer]


class FilesystemState(AgentState):
    files: Annotated[Dict[str, str], file_reducer]


class DeepAgentState(PlanningState, FilesystemState):
    pass
```

The state architecture provides isolated namespaces for different capabilities. Planning state tracks task progression, filesystem state manages virtual files, and the combined DeepAgentState merges both. Custom middleware can extend the schema further for domain-specific state requirements.

State reducers control how updates merge across agent turns. The file_reducer merges file dictionaries, enabling sub-agents to add files without conflicting with existing files. The todo_reducer manages task list updates, handling status changes and new task additions.
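The merge semantics can be sketched as plain reducer functions of the shape LangGraph expects for `Annotated` state fields: a function taking the current value and an update and returning the merged value. The `file_reducer` below assumes last-writer-wins when both sides write the same filename; the `todo_reducer` assumes updates are matched by task text. Both are illustrative assumptions rather than DeepAgents' exact code.

```python
from typing import Dict, List, Optional


def file_reducer(current: Optional[Dict[str, str]], update: Optional[Dict[str, str]]) -> Dict[str, str]:
    """Sketch: merge file maps without mutating either input; on a name collision the update wins."""
    if current is None:
        return dict(update or {})
    if update is None:
        return dict(current)
    return {**current, **update}


def todo_reducer(current: Optional[List[dict]], update: Optional[List[dict]]) -> List[dict]:
    """Hypothetical: update statuses of known tasks and append new ones, preserving order."""
    merged = [dict(t) for t in (current or [])]
    index = {t["task"]: i for i, t in enumerate(merged)}
    for todo in update or []:
        if todo["task"] in index:
            merged[index[todo["task"]]]["status"] = todo["status"]
        else:
            index[todo["task"]] = len(merged)
            merged.append(dict(todo))
    return merged
```

Under this reducer, a sub-agent returning `{"findings.md": ...}` adds a file next to the parent's existing ones instead of replacing the whole map, which is what makes the file-plus-summary pattern work.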

State Separation Architecture

Separating concerns into typed state schemas (todos for planning, files for results, messages for conversation) outperforms keeping everything in conversation history, enabling targeted context management without parsing unstructured text.

LangGraph checkpointers enable state persistence across sessions. Agents can checkpoint state after each turn, supporting long-running tasks that span multiple user interactions, human-in-the-loop workflows that pause for approval, and failure recovery that resumes from the last successful checkpoint.
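The checkpoint-and-resume cycle can be illustrated with a toy in-memory checkpointer keyed by thread ID. This is a plain-Python stand-in: real applications pass one of LangGraph's checkpointer implementations to the agent and select the thread per call through `config={"configurable": {"thread_id": ...}}`. `ToyCheckpointer` and `run_turn` are hypothetical, and the "agent work" is a stub.

```python
import copy
from typing import Any, Dict, List

State = Dict[str, Any]


class ToyCheckpointer:
    """Hypothetical in-memory checkpointer: one list of snapshots per thread."""

    def __init__(self) -> None:
        self._snapshots: Dict[str, List[State]] = {}

    def put(self, thread_id: str, state: State) -> None:
        # Deep-copy so later mutations cannot alter a saved checkpoint
        self._snapshots.setdefault(thread_id, []).append(copy.deepcopy(state))

    def latest(self, thread_id: str) -> State:
        snapshots = self._snapshots.get(thread_id)
        if not snapshots:
            return {"messages": [], "files": {}}
        return copy.deepcopy(snapshots[-1])


def run_turn(cp: ToyCheckpointer, thread_id: str, user_msg: str) -> State:
    state = cp.latest(thread_id)  # resume from the last successful checkpoint
    state["messages"].append({"role": "user", "content": user_msg})
    reply = f"ack {len(state['messages'])}"  # stand-in for the agent's actual work
    state["messages"].append({"role": "assistant", "content": reply})
    cp.put(thread_id, state)  # checkpoint only once the turn has completed
    return state
```

Because the snapshot is written only after a turn finishes, a turn that raises (for example on an API timeout) leaves the previous snapshot as the resume point.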

This state management connects to patterns in Context Engineering where explicit state schemas enable sophisticated context control.

Built-in Tools

DeepAgents provides six core tools through middleware:

write_todos: Creates or updates task lists with status tracking. Agents use this for planning multi-step workflows, making their intended approach explicit before execution.

ls: Lists files in the virtual filesystem, showing what intermediate results have been stored.

read_file: Retrieves file content with pagination support for large files, preventing context overflow from verbose results.

write_file: Creates or overwrites files in the virtual filesystem, enabling agents to persist findings, partial results, or structured data.

edit_file: Modifies files through string replacement, supporting iterative refinement of results without rewriting entire files.

task: Delegates subtasks to sub-agents by name, providing the hierarchical delegation capability. The tool accepts a sub-agent identifier and task description, returning the sub-agent’s result.
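The four file tools can be approximated over a plain dictionary, which is all the `files: Dict[str, str]` state holds. This is a hypothetical sketch: the `VirtualFS` class, the line-based `offset`/`limit` pagination, and the rule that `edit_file` requires exactly one match are assumptions chosen for illustration, not DeepAgents' exact tool signatures.

```python
from typing import Dict, List


class VirtualFS:
    """Hypothetical in-memory filesystem mirroring ls/read_file/write_file/edit_file."""

    def __init__(self) -> None:
        self.files: Dict[str, str] = {}

    def ls(self) -> List[str]:
        return sorted(self.files)

    def write_file(self, path: str, content: str) -> None:
        self.files[path] = content  # create or overwrite

    def read_file(self, path: str, offset: int = 0, limit: int = 100) -> str:
        # Line-based pagination keeps large files from flooding the context
        lines = self.files[path].splitlines()
        return "\n".join(lines[offset:offset + limit])

    def edit_file(self, path: str, old: str, new: str) -> None:
        # String replacement; an ambiguous or missing match is rejected
        content = self.files[path]
        count = content.count(old)
        if count != 1:
            raise ValueError(f"expected exactly one match for {old!r}, found {count}")
        self.files[path] = content.replace(old, new, 1)
```

Requiring a unique match is a conservative choice: it forces the caller to supply enough surrounding text that the edit cannot land in the wrong place.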

These tools implement common patterns from Multi-Agent Research Systems: planning through explicit task lists, intermediate result storage through files, and hierarchical delegation through sub-agents. Together they provide a standard vocabulary for complex agent behaviors that would otherwise require custom implementation.

Agent Creation and Configuration

Two functions create DeepAgents:

```python
from deepagents import create_deep_agent

# internet_search, custom_tool, research_subagent, custom_middleware,
# ExtendedState, and checkpointer are placeholders defined elsewhere.
agent = create_deep_agent(
    tools=[internet_search, custom_tool],
    instructions="You are an expert researcher conducting thorough investigations.",
    model="claude-sonnet-4-20250514",  # Optional, defaults to Claude Sonnet 4
    subagents=[research_subagent],  # Optional custom sub-agents
    middleware=[custom_middleware],  # Optional additional middleware
    context_schema=ExtendedState,  # Optional extended state schema
    checkpointer=checkpointer,  # Optional state persistence
    tool_configs={"write_file": True},  # Optional human-in-the-loop approval
)
```

The async_create_deep_agent variant supports asynchronous tools, which is essential for Model Context Protocol Integration with MCP servers that use async I/O.

Agent construction follows a deterministic flow: resolve language model, assemble middleware stack, construct LangGraph agent, apply system prompts, enable tool execution. This separation enables testing individual components while maintaining integration guarantees.

The instructions parameter provides domain-specific guidance prepended to the base prompt. Well-crafted instructions explain the agent’s role, quality criteria, and approach constraints. For research agents, instructions might emphasize thoroughness, source diversity, and citation requirements.

Sub-agent configuration enables specialization through focused prompts and tool subsets:

```python
research_subagent = {
    "name": "research-agent",
    "description": "Deep-dive research on specific topics using web search",
    "prompt": (
        "You conduct thorough research on assigned topics. "
        "Use internet_search extensively to explore multiple perspectives. "
        "Summarize key findings with source citations."
    ),
    "tools": ["internet_search"],
}
```

This configuration creates a sub-agent that the main agent can invoke through the task tool by naming "research-agent" and passing a description such as "investigate quantum computing applications". Of the main agent's tools, the sub-agent receives only internet_search, focusing its capabilities on information gathering rather than analysis or writing.
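A sketch of how a sub-agent's `tools` list of names might be resolved against the main agent's tools, so the sub-agent only sees the subset it names. The `resolve_subagent` helper and `ResolvedSubAgent` type are hypothetical; the dictionary keys mirror the research_subagent configuration above, and treating an omitted `tools` key as "inherit every main-agent tool" is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class ResolvedSubAgent:
    name: str
    description: str
    prompt: str
    tools: Dict[str, Callable]


REQUIRED_KEYS = ("name", "description", "prompt")


def resolve_subagent(config: dict, main_tools: List[Callable]) -> ResolvedSubAgent:
    """Hypothetical: validate a sub-agent config and pick its tool subset by name."""
    missing = [k for k in REQUIRED_KEYS if k not in config]
    if missing:
        raise ValueError(f"sub-agent config missing keys: {missing}")
    available = {fn.__name__: fn for fn in main_tools}
    # Assumption: omitting "tools" means the sub-agent inherits every main-agent tool
    wanted: Optional[List[str]] = config.get("tools")
    names = list(available) if wanted is None else wanted
    unknown = [n for n in names if n not in available]
    if unknown:
        raise ValueError(f"unknown tools for {config['name']}: {unknown}")
    return ResolvedSubAgent(config["name"], config["description"], config["prompt"],
                            {n: available[n] for n in names})
```

Failing on unknown tool names at configuration time surfaces typos before the agent runs, instead of leaving a sub-agent silently without a tool it was told to use.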

System Prompt Layering

DeepAgents combines multiple prompt layers into the final system prompt:

User Instructions: Domain-specific guidance from the instructions parameter, describing the agent’s role and approach.

Base Agent Prompt: Framework-level guidance about using planning tools, delegating to sub-agents, and managing context efficiently.

Middleware Prompts: Specialized instructions from middleware components, explaining how to use their tools and state. Planning middleware adds guidance about creating task lists, filesystem middleware explains file operations, sub-agent middleware describes delegation patterns.

This layering enables extending agent capabilities without rewriting base prompts. New middleware can inject additional guidance while existing prompts remain unchanged. The architecture mirrors how Prompt Engineering patterns compose: complex agent behaviors are built from layered, focused instructions rather than monolithic prompts.

Middleware modifies prompts through the modify_model_request() hook, which receives the pending model request (including the current system prompt) and returns a modified version. This enables dynamic prompt construction based on state: guidance can adapt to the available tools, the current task context, or resource constraints.
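The layering and the request-modifying hook can be sketched without the framework. `PromptRequest` is a hypothetical stand-in for whatever object the hook receives, and each hook returns a modified copy. The ordering (user instructions, then base prompt, then middleware prompts) follows the description above; the `delegation_hint` hook that reacts to the available tools is an illustrative assumption.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PromptRequest:
    """Hypothetical request object: the system prompt plus the tool names on offer."""
    system_prompt: str
    tools: Tuple[str, ...]


Hook = Callable[[PromptRequest], PromptRequest]


def append_section(text: str) -> Hook:
    """Build a hook that appends a static middleware prompt section."""
    def hook(req: PromptRequest) -> PromptRequest:
        return replace(req, system_prompt=f"{req.system_prompt}\n\n{text}")
    return hook


def delegation_hint(req: PromptRequest) -> PromptRequest:
    # Dynamic guidance: only mention delegation when the task tool is available
    if "task" not in req.tools:
        return req
    return replace(req, system_prompt=req.system_prompt + "\n\nDelegate focused subtasks with the task tool.")


def build_request(instructions: str, base_prompt: str, tools: Tuple[str, ...], hooks: List[Hook]) -> PromptRequest:
    req = PromptRequest(system_prompt=f"{instructions}\n\n{base_prompt}", tools=tools)
    for hook in hooks:  # applied in middleware stack order
        req = hook(req)
    return req
```

Because each hook returns a new frozen object rather than mutating shared state, the contribution of any single middleware can be tested in isolation.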

Human-in-the-Loop Workflows

The tool_configs parameter enables human approval workflows for sensitive operations:

```python
from deepagents import create_deep_agent
from langgraph.checkpoint.memory import MemorySaver

# write_file, delete_file, and execute_code are application tools defined elsewhere
checkpointer = MemorySaver()  # in-memory checkpointer; use a durable one in production

agent = create_deep_agent(
    tools=[write_file, delete_file, execute_code],
    instructions="You manage system resources.",
    tool_configs={
        "delete_file": {
            "allow_accept": True,  # Approve as proposed
            "allow_respond": True,  # Reject with feedback
            "allow_edit": False,  # Cannot modify deletion
        },
        "execute_code": True,  # All approval options enabled
    },
    checkpointer=checkpointer,  # Required for state persistence
)
```

When an agent calls a configured tool, execution pauses with an interrupt containing the proposed action. The application can present this to humans for approval, so the AI proposes actions while humans keep control.

Resumption options include:

- Accept: Execute the tool call as proposed

- Edit: Modify tool parameters before execution

- Respond: Reject the action and provide feedback to the agent

This pattern enables deployment scenarios where agents automate routine decisions but escalate consequential actions to humans. The framework manages the interrupt-resume cycle, maintaining state consistency across pauses.
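The resume options can be modeled as a small decision function. The sketch assumes, as the configuration above suggests, that `True` enables every option and that a dict enables only the flags set to `True`; treating a missing flag as disallowed is a further assumption. The `Decision` shape and `resolve_interrupt` function are hypothetical and do not reflect the framework's actual interrupt payload format.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional, Union

ToolConfig = Union[bool, Dict[str, bool]]
OPTIONS = ("accept", "edit", "respond")


@dataclass
class Decision:
    kind: str  # "accept", "edit", or "respond"
    args: Optional[Dict[str, Any]] = None  # replacement arguments for "edit"
    feedback: str = ""  # message returned to the agent for "respond"


def allowed_options(config: ToolConfig) -> Dict[str, bool]:
    if isinstance(config, bool):
        return {opt: config for opt in OPTIONS}
    # Assumption: a flag missing from the dict counts as disallowed
    return {opt: bool(config.get(f"allow_{opt}", False)) for opt in OPTIONS}


def resolve_interrupt(tool: str, proposed: Dict[str, Any], config: ToolConfig,
                      decision: Decision) -> Dict[str, Any]:
    """Hypothetical: turn a human decision into 'execute with args' or 'return feedback'."""
    if not allowed_options(config).get(decision.kind, False):
        raise PermissionError(f"{decision.kind!r} is not allowed for {tool}")
    if decision.kind == "accept":
        return {"execute": True, "args": proposed}
    if decision.kind == "edit":
        return {"execute": True, "args": decision.args if decision.args is not None else proposed}
    # "respond": skip execution and hand the feedback back to the agent
    return {"execute": False, "feedback": decision.feedback}
```

With the delete_file configuration above, an attempted edit is refused, while accept and respond go through.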

Integration with Research Patterns

DeepAgents implements infrastructure for patterns observed in Multi-Agent Research Systems and Open Deep Research:

Progressive Task Decomposition: The planning tool enables Progressive Research Exploration where agents create initial task lists, execute first steps, reflect on findings, then refine their plans based on discoveries. This iterative planning prevents premature commitment to approaches that might not fit emerging information.

Parallel Research Workers: Sub-agent delegation supports the worker pattern from Research Workflow Architecture where multiple specialized agents explore different subtopics simultaneously. The main agent decomposes a research question into focused subtopics, spawns sub-agents for each, then synthesizes their findings.

ReAct Integration: DeepAgents naturally supports the ReAct Agent Pattern through its tool-calling infrastructure. Agents interleave reasoning about what information they need with tool execution to gather that information, using observations to inform subsequent reasoning. The planning tool makes this reasoning explicit through task lists.

Context Isolation: Sub-agent delegation implements Isolating Context architecturally. Each sub-agent operates with its own context window, preventing Context Clash where unrelated information interferes with focused reasoning. The main agent sees only sub-agent summaries, not their full reasoning traces.

MCP Integration: The framework supports Model Context Protocol Integration through tool registration. MCP servers expose capabilities as tools that DeepAgents can discover and invoke through its standard tool-calling interface. The async variant supports MCP servers using asynchronous I/O.
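The Parallel Research Workers pattern can be sketched with the standard library's thread pool: the coordinator fans subtopics out to workers, each of which sees only its own subtopic, and then synthesizes the brief summaries while keeping the detailed notes as files. `research_worker` is a stub standing in for a sub-agent call, and the synthesis step is deliberately trivial; a real system would make LLM calls in both places.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def research_worker(subtopic: str) -> Dict[str, str]:
    # Stub for a sub-agent: a brief summary plus a detailed "file"
    return {
        "summary": f"{subtopic}: key points",
        "file": f"{subtopic}.md",
        "detail": f"Detailed notes on {subtopic}.",
    }


def coordinate(question: str, subtopics: List[str], max_workers: int = 4) -> Dict[str, object]:
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order even though workers run concurrently
        results = list(pool.map(research_worker, subtopics))
    files = {r["file"]: r["detail"] for r in results}
    synthesis = f"{question}\n" + "\n".join(f"- {r['summary']}" for r in results)
    return {"answer": synthesis, "files": files}
```

Threads suit this shape because sub-agent calls are dominated by network I/O; the returned `files` map plays the role of the shared virtual filesystem.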

Advanced Features

Prompt Caching: The Anthropic middleware enables automatic prompt caching for repeated system prompt prefixes. This can substantially reduce latency and cost for agents that make many LLM calls sharing the same prefix, as is common in research workflows with iterative refinement.

Conversation Summarization: The summarization middleware automatically compresses long conversation histories so that token limits do not constrain agent capability. This implements Reducing Context strategies: essential information persists while verbose history gets compressed.

Streaming Support: DeepAgents integrates with LangGraph’s streaming capabilities, enabling progressive disclosure of agent reasoning and results. Applications can display intermediate states as agents work, improving perceived performance and enabling early feedback.

Checkpointing: State persistence through LangGraph checkpointers enables pausing and resuming agent execution. This supports long-running tasks exceeding practical timeout limits, human-in-the-loop workflows requiring approval, and failure recovery from the last successful checkpoint.

Observability: Because DeepAgents builds LangGraph agents, execution can be traced (for example with LangSmith) to show which tools were called, what the state looked like at each step, and where time was spent. This visibility proves crucial for debugging non-deterministic workflows whose behavior varies across runs.
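A rough sketch of the compression idea behind conversation summarization: when an estimated token count exceeds a budget, older messages are replaced by one summary message and the most recent ones are kept verbatim. Word counting as a token estimate, the `summarize` callback, and the summary message's role are simplifying assumptions; a production middleware would use a real tokenizer and an LLM call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def estimate_tokens(messages: List[Message]) -> int:
    # Crude proxy for a tokenizer: count whitespace-separated words
    return sum(len(m["content"].split()) for m in messages)


def compress_history(messages: List[Message], budget: int, keep_last: int,
                     summarize: Callable[[List[Message]], str]) -> List[Message]:
    """Sketch: summarize everything except the last `keep_last` messages once over budget."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    if estimate_tokens(messages) <= budget or len(messages) <= keep_last:
        return messages  # nothing to compress
    old, recent = messages[:-keep_last], messages[-keep_last:]
    summary = {"role": "system", "content": f"Summary of earlier conversation: {summarize(old)}"}
    return [summary] + recent
```

Keeping the most recent messages verbatim preserves the immediate working context, while the summary carries older decisions forward in compact form.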

Production Considerations

Cost Management: Explicit middleware structure enables cost tracking per component. You can measure token consumption from planning, filesystem, and sub-agent middleware separately, identifying optimization opportunities. Heterogeneous Model Strategies can reduce costs by using faster, cheaper models for routine tasks like summarization while reserving expensive models for complex reasoning.

Error Handling: Robust error handling becomes critical when coordinating multiple autonomous agents. Failure modes include agents pursuing unproductive paths, context window exhaustion, API timeouts, and coordination failures between agents. Applications built on the framework need graceful degradation: producing useful partial results when some components fail.

Testing: Deterministic workflow paths through middleware are testable with traditional methods, since specific inputs produce specific state transitions. Non-deterministic LLM outputs require evaluation approaches like LLM-as-Judge, but the middleware structure makes clear what to test at each layer.

Deployment: DeepAgents supports multiple deployment models: local execution for development, cloud deployment for production, and integration with LangSmith for monitoring. This flexibility keeps development and production experiences consistent.
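Per-component cost attribution and Heterogeneous Model Strategies can be combined in one small ledger. The model names, per-token prices, and `ROUTES` table below are placeholders invented for this sketch, not real pricing or DeepAgents configuration; the point is the bookkeeping structure.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict

# Placeholder prices in dollars per 1,000 tokens (hypothetical numbers)
PRICE_PER_1K = {"small-model": 0.001, "large-model": 0.015}
# Route routine work to the cheaper model and reasoning-heavy work to the larger one
ROUTES = {"summarization": "small-model", "planning": "large-model", "subagent": "large-model"}


@dataclass
class CostLedger:
    """Hypothetical ledger attributing tokens and spend to each component."""
    tokens: Dict[str, int] = field(default_factory=lambda: defaultdict(int))
    dollars: Dict[str, float] = field(default_factory=lambda: defaultdict(float))

    def record(self, component: str, n_tokens: int) -> str:
        model = ROUTES.get(component, "large-model")  # unknown components default to the large model
        self.tokens[component] += n_tokens
        self.dollars[component] += n_tokens / 1000 * PRICE_PER_1K[model]
        return model
```

Defaulting unknown components to the larger model errs toward quality; a cost-sensitive deployment might flip that default.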

Comparison with Alternatives

Pure Tool-Calling: Basic agents that call tools in a loop lack planning, delegation, and state management. They work for simple tasks but fail on complex workflows requiring decomposition. DeepAgents adds the planning and coordination layer that pure tool-calling lacks.

AutoGPT-style Agents: Fully autonomous agents like AutoGPT give models complete control over their actions. This provides maximum flexibility but minimal predictability. DeepAgents balances autonomy with developer control through middleware configuration and sub-agent specialization.

Workflow Engines: Generic workflow engines like Temporal or Airflow manage arbitrary tasks but lack AI-specific features. DeepAgents provides LLM primitives (prompt management, token tracking, streaming, conversation memory) that generic workflow engines don't offer.

LangGraph Directly: DeepAgents builds on LangGraph Workflows but adds opinionated patterns for planning, delegation, and state management. Teams can use LangGraph directly for maximum flexibility or use DeepAgents for standardized agent architectures with less configuration.

Implementation Example

A minimal research agent demonstrates core patterns:

```python
import os
from typing import Literal

from deepagents import create_deep_agent
from tavily import TavilyClient

tavily_client = TavilyClient(api_key=os.environ["TAVILY_API_KEY"])


def internet_search(
    query: str,
    max_results: int = 5,
    topic: Literal["general", "news", "finance"] = "general",
    include_raw_content: bool = False,
):
    """Run a web search to find information"""
    return tavily_client.search(
        query,
        max_results=max_results,
        include_raw_content=include_raw_content,
        topic=topic,
    )


research_subagent = {
    "name": "research-agent",
    "description": "Conducts deep research on specific topics",
    "prompt": "You are a thorough researcher. Use internet_search to explore topics from multiple angles. Cite sources for key claims.",
    "tools": ["internet_search"],
}

agent = create_deep_agent(
    tools=[internet_search],
    instructions="You are an expert researcher who coordinates investigations by delegating to specialized research agents.",
    subagents=[research_subagent],
)

result = agent.invoke({
    "messages": [{"role": "user", "content": "What is LangGraph and how does it compare to other agent frameworks?"}]
})
```

This creates a two-level hierarchy: the main agent coordinates research by delegating to a specialized research sub-agent. The sub-agent explores the topic using internet_search, while the main agent synthesizes findings. The middleware automatically adds planning tools, filesystem tools, and delegation capabilities.

Architectural Philosophy

DeepAgents embodies several design principles for agent frameworks:

Middleware Composition: Complex capabilities emerge from composing focused middleware components rather than monolithic implementations. This mirrors The Lego Approach for Building Agentic Systems - building sophisticated systems from interchangeable pieces.

Explicit State Management: Rather than maintaining everything in conversation history, structured state schemas separate concerns. Planning state for task tracking, filesystem state for results, message state for conversation - each serves a clear purpose.

Hierarchical Delegation: Complex tasks decompose through delegation to specialized sub-agents with context isolation. This implements patterns from Multi-Agent Research Systems where parallel exploration outperforms sequential investigation.

Layered Prompting: System prompts layer domain instructions, framework guidance, and middleware-specific instructions. This composition enables extending agent capabilities without rewriting base prompts.

Infrastructure for Patterns: The framework provides infrastructure implementing common patterns from Research Agent Patterns: planning through task lists, delegation through sub-agents, and persistence through virtual files. These capabilities become standardized rather than requiring custom implementation per agent.

DeepAgents demonstrates how agent frameworks can provide sophisticated capabilities through composable architecture while maintaining flexibility for specialized requirements. The middleware pattern enables extending capabilities without modifying core code, while sub-agent delegation enables hierarchical task decomposition at scale.